Introduction

Often when we think of the world of analysis we always think of numerical experimental data which then will have to be somehow elaborated and then subsequently displayed on a graph.

In reality, the analysis has no prejudice as regards the type of “data to be analyzed”, this in addition to the simple number, can also be an image, a sound or even a text. And it is precisely the analysis of the texts, and in particular of the language used, which we will discuss in this article.

In fact, the purpose of this article is to introduce the NLTK library, a Python library that allows Language Processing and analysis of texts in general. We will see how to install it on our computer and we will make the first approaches to better understand how it works and how it can be useful.

NLTK installation

Installing the library inside Python is very simple, since it is available as a PyPI package.

To install it on Python 3.x environments

$ pip3 install nltk

To install it on Python 2.7.x environments

$ pip install nltk

However, for this article and for all the articles that will be published on this site, the reference environment will always be Python 3.x.

Language Processing

Before we begin we introduce some key concepts that must be taken into account when dealing with Language Processing.

- millions of words as data

- context

- vocabulary

- language

- evolution of language

One of the main problems when dealing with the analysis of a text is the large amount of words (data) contained in it, often we talk about analyzing and comparing different works with thousands and thousands of pages (millions of words). Therefore, tools must be available that allow us to process this amount of data, and computer science really helps us.

In this regard, many analysis techniques have been developed which are mainly based on statistical tools and complex research algorithms. Many of these techniques and tools are available in the NLTK library.

Another important aspect in the analysis of texts is that the words present in a text do not occupy a random position, but are in a particular context. Context is a very important concept because it involves the relationship that a word has with the words that proceed and follow it.

In fact, those who analyze the texts know very well the importance of how words are inserted within a sentence. Often some words are coupled with other words to modify their meaning, to give a positive or negative context, or to change their meaning. . From here the bases for the emotional analysis of the text begin.

Furthermore, these ways of matching words vary from author to author, because the vocabulary used by each person is unique. So an analysis of the context within the text could confirm the authorship or not of a text by an author.

Another important aspect to consider is the language in which the text was written. In the world there are many languages used and each is based on its own vocabulary and a different way of how to structure sentences. A good analysis of the language will have to take it into account when it must be applied to different languages.

Another field of application of Language Processing is the evolution of language over time. In fact, not only does the use of a language and its vocabulary change from individual to individual, but it changes and evolves over time. Some words will be abandoned, replaced by new ones, often driven by social evolutions and new concepts introduced. But most vocabulary words change naturally over time, some consonants or vowels are modified, gradually changing the way they are pronounced.

There are many applications that Language Processing offers us, and many are the tools that come to us from the NLTK library. That’s why this library is really a powerful tool for all those who work in the text analysis sector.

Let’s start with NLTK

Now that we have installed the library, we can start working with some data already prepared, related to NLTK, which are already available for testing.

You can download this data directly from the Python interpreter.

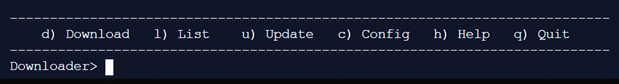

import nltk nltk.download()

A panel menu will open from the interpreter where you can select a lot of data (for example books) and extensions to use with the NLTK library.

Or if the graphic part is not enabled in your operating system, the text version will appear.

In questo caso scrivete solo:

d book

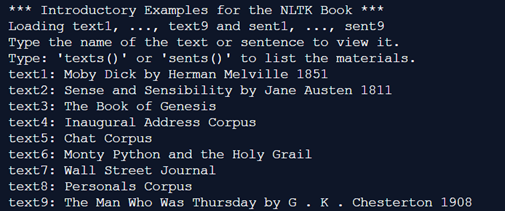

In both cases, download the NLTK book collection. You will see a list of literary works downloaded to your system.

To see the list of books just downloaded and available for our tests, simply write:

from nltk.book import *

And in a few seconds the complete list will appear.

As you can see, each book is identified with a text# variable.

So knowing the available texts the next time you can directly import the desired text.

from nltk.book import text3

The newly imported text (text3) is the book of Genesis in English (The book of Genesis). The text is in a format that can be easily manipulated by the library, that is, it is an array, in which each element is a string of characters. So it can be manipulated as such.

A certain element can be accessed by specifying its index in square brackets.

print(text3[143])

Or search for the index of the element containing a certain word.

print(text3.index('angels'))

As in this case, if a certain word is contained several times in the text, the corresponding index will be that of the first occurrence, that is, the element with the lowest index containing that word

As with all arrays, the slicing rules apply.

So if we want to print the first 40 words of the text, we can write:

print(text3[1:41])

We got all the elements contained in the first 40 elements of the array. If instead we want to have the array written in text form, this option is better:

print((' ').join(text3[1:41]))

47/5000We thus obtain a more readable line of text.

The dictionary

In the introductory part we saw that one of the fundamental concepts of language analysis is the dictionary. Let’s see a series of examples with which the NLTK library allows us to work concretely with this concept.

Each text is characterized by a particular set of words, which we will identify as a dictionary (vocabulary). This is often linked to the particular theme of the book and to the particular author who wrote it (this is true if the text is in the original language).

Let’s start with the simplest thing to do, to measure how many words the text under analysis is made of.

print(len(text3))

Getting.

The number we got is not exactly the number of words in the text, but a token. This is the technical term for a sequence of characters defined at the ends by two spaces. So they can be words, but also symbols, punctuation, emoticos, and so on. To better understand this, we can use the set () function. This function groups together all the tokens in a text by eliminating duplicates. If we then order the tokens present inside in alphabetical order, what we will get will be considered as our token dictionary.

print(sorted(set(text3)))

We will get an array with all the tokens present in the text in alphabetical order.

To know the size of the dictionary:

print(len(set(text3)))

An interesting metric that can be used in analyzing a text is its lexical richness, that is, how many more different terms are used in a text. This measurement can be obtained simply by calculating the ratio between the tokens in the dictionary and the tokens in the text (not the words 😉).

print(len(set(text3)) / len(text3))

These values relate to the entire dictionary present in a text, but it is also possible to approach the individual words (tokens). For example, we want to know how many times a word is present in a text. For example the word “angels” in the book of Genesis (text3). You can easily get this value with the method count().

print(text3.count("angels"))obtaining4

Frequency Distribution

Now that we understand the concept of dictionary, a useful function for our analyzes is the frequency distribution of words. That is, a function that tells us how much the dictionary words are present within the text by means of a simple count on which statistics can be made.

To achieve this, the NLTK library provides us with a simple FreqDist () function which, applied to a text, creates an object containing all the information on the word frequency of the text. Then there are a number of methods to then obtain the information within it.

For example, let’s see what are the 12 most frequent words in the book of Genesis using the most_common() method.

dist = nltk.FreqDist(text3) print(dist) print() print(dist.most_common(12))

As you can see from the code, distribution is an object. Furthermore, as we could easily guess, the 12 most frequent words (or better tokens) are punctuation characters, prepositions and conjunctions.

The longest words

Another type of research that can be thought of is that based on the length of the words. For example, we may be interested in knowing all words longer than 12 characters in a text. In this case, a set is first created (array dictionary containing all the words within a text). Then you scan element by element (by element) to select only those that meet the requirement of being longer than 12 characters. Here is the example code:

S = set(text3) longest = [i for i in S if len(i) > 12] print(sorted(longest)

By running it you will find the longest words in the book of Genesis.

The Context

Now that we have seen how to get and work on a dictionary, let’s introduce another series of examples that allow us to work on another very important concept in the analysis of language: the context of words.

Search for occurrences of a string in text

One of the basic operations that is performed most often is searching for a word in a text. In NLTK you can do this using the concordance () function.

For example, we want to see if the word “angels” is present in the book of Genesis.

text3.concordance("angels")We will get the following result:

As we can see there are 4 occurrences. Four different sentences are then shown. As you can see, the word “angels” appears in the center of each line, and before and after there are the words that precede or follow it in the text. This is called context. As we said earlier, this concept is very important in the analysis of language.

So concordance () allows us to see the words searched in their context.

From here, one more step can be taken with NLTK. For example … from the context analysis can you find words similar to the one you are looking for?

Let’s try using the similar () function.

We rewrite everything we have done programmatically (so that everything can be inserted into a program code and not interactively with the interpreter).

import nltk

nltk.download('book')

from nltk.book import text3

text3.similar("angels") We will get the following result

This is the list of words that appear in a context similar to the word “angels” and therefore can be related to it in some way and that perform a similar function in the text (in fact we see the word god).

This type of analysis does not serve to find synonyms for the word “angels” as much as to characterize the author and his dictionary (which differs from author to author). For example, in the genesis the word “angels” is used in the “sacred” meaning associating it with other words very common in the biblical and religious sense.

If instead we will analyze the same word in another text, for example Moby Dick (text1)

import nltk

nltk.download('book')

from nltk.book import text1

text1.concordance("angels")

print(" ")

text1.similar("angels") You will get

In this case, we will be surprised to discover that the word “angels”, for common sense related to the world of the sacred and religious, is more present in the book of Moby Dick than in Genesis. From the context of the 9 occurrences we see many quotations taken from the bible and from the religious theme in general. This suggests that the author of Moby Dick frequently uses biblical quotations, which keeps the word “angels” in great use even if the general context of the book also contains terms related to fishing and navigation. Just see the results of similar ().

Collocation

We now introduce two other concepts related to the context theme. A collocation is a sequence of words that often appear together in the text (this also in spoken jargon). For example “red wine” is a good example of location, while “blue wine” or “green wine” is not. Indeed these last two examples immediately make us stand out a sense of strangeness. This is because collocations are deeply rooted in our common sense of understanding language. We could often understand collocations as the association of multiple words that are often considered as specific to a unique concept in our mind. In other languages, these collocations are more evident since they are made explicit by grouping several words to create a new dictionary. German is an excellent example in this case: Rotwein is a term present in the dictionary (Rot = red) and (Wein = wine). In German there is a tendency to combine several words to create new ones.

text3.collocations()

Dispersion Plot

Another interesting operation is to see graphically the distribution in the text of some certain words. Sometimes it can be interesting to know if certain words are equally distributed in the text, or are found exclusively in a certain part of the text.

To do this we use the NLTK dispersion plot.

text2.dispertion_plot([“god”,”angels”,”genesis”,”Abraham”])